Continuing my quest, playing with old computer science theories today i am writing about recursion and using it to solve similar issue as that of Knapsack problem. So, if you know about Recursion (like some flavor of it which i discussed in my last post) you would know its a very powerful tool which can be used to solve complex computer science problems.

In this approach we call same method from within a method with a base case which marks as its end, so we effectively call our self till we reach a base case and then we trace back till the starting. In java when a method call is placed in code it is loaded on to a stack in JVM which the run time environment will call, so if i call my self again and again i will end up having several stack entries of the same method and when the base case returns something it will unfold with last in last out rule.

As it may seem this is a powerful yet not that efficient tool as the repeated method calls can be expensive.

So, let's take a sample problem which is similar to Knapsack Problem remember Knapsack problem is a NP-complete problem hence no optimal solution exists for it. Please note this exercise is to understand and practice recursion not to get the optimal solution to Knapsack Problem.

Problem

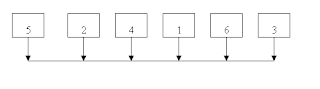

We have a array of descending numbers and we need to pick a set whose total is equal to the target.

E.g. array = 11, 8, 7, 6 ,5

Target Total = 20

We will create a program that can calculate the first set of numbers from this to get the result.

References :

Data Structures and Algorithms by Robert Lafore

In this approach we call same method from within a method with a base case which marks as its end, so we effectively call our self till we reach a base case and then we trace back till the starting. In java when a method call is placed in code it is loaded on to a stack in JVM which the run time environment will call, so if i call my self again and again i will end up having several stack entries of the same method and when the base case returns something it will unfold with last in last out rule.

As it may seem this is a powerful yet not that efficient tool as the repeated method calls can be expensive.

So, let's take a sample problem which is similar to Knapsack Problem remember Knapsack problem is a NP-complete problem hence no optimal solution exists for it. Please note this exercise is to understand and practice recursion not to get the optimal solution to Knapsack Problem.

Problem

We have a array of descending numbers and we need to pick a set whose total is equal to the target.

E.g. array = 11, 8, 7, 6 ,5

Target Total = 20

We will create a program that can calculate the first set of numbers from this to get the result.

public class KnapsackProblem {

private Stackx output;

private int[] input;

private int size;

private int target;

public KnapsackProblem(int target){

this.input = new int[10];

this.size = 0;

this.output = new Stackx(10);

this.target = target;

}

public void insert(int item) {

input[size++] = item;

}

public void recKnapsack() {

boolean flag = false;

for (int i = 0; i < size; i++) {

if (input[i] == target) {

System.out.println("got it !");

} else if (input[i] > target) {

continue;

} else if (input[i] < target) {

boolean result = knapsackNew(target, i);

if (result) {

output.display();

flag = true;

break;

} else{

while(!output.isEmpty()){

output.pop();

}

}

}

}

if (flag) {

System.out.println(" Success !!");

} else {

System.out.println("Cant find any conbination to get the target: " + target);

}

}

public boolean knapsackNew(int target, int i) {

if (i == size) {

return false;

}

if (input[i] < target) {

output.push(input[i]);

return knapsackNew(target - input[i], ++i);

} else if (input[i] > target) {

return knapsackNew(target, ++i);

} else if (input[i] == target) {

output.push(input[i]);

return true;

}

return false;

}

public static void main(String[] args) {

KnapsackProblem obj = new KnapsackProblem(20);

obj.insert(11);

obj.insert(8);

obj.insert(7);

obj.insert(6);

obj.insert(5);

obj.recKnapsack();

}

}

References :

Data Structures and Algorithms by Robert Lafore